Description

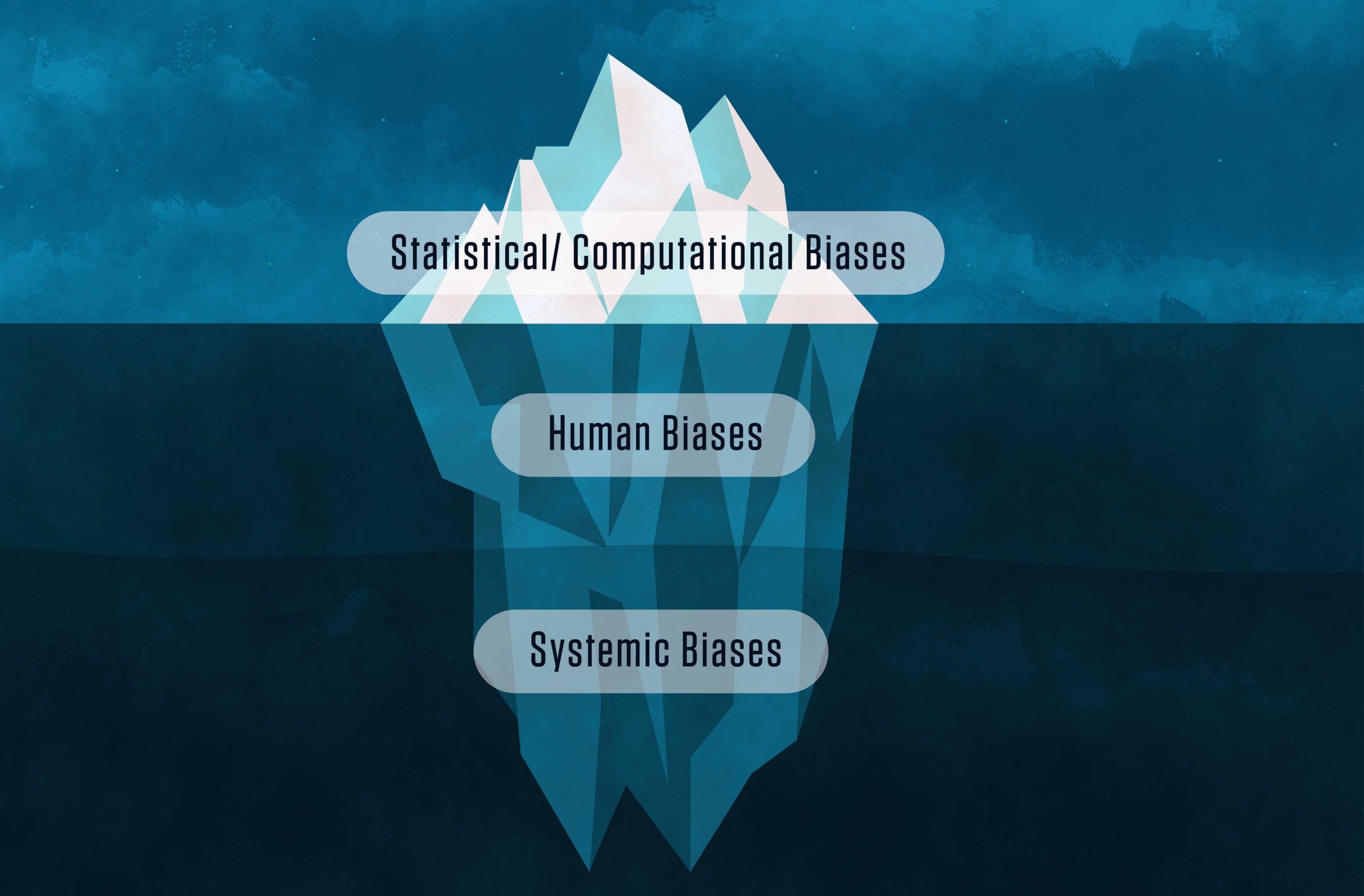

The Bias Detection Tool for AI Models is designed to identify, quantify, and mitigate algorithmic bias in machine learning and artificial intelligence systems. As organizations increasingly deploy AI to automate decision-making in finance, healthcare, education, and criminal justice, ensuring ethical and fair outcomes has become a top priority.

The tool lets organizations audit both datasets and models using fairness metrics such as disparate impact ratio, equal opportunity difference, and statistical parity. It runs subgroup performance analysis to detect underrepresented or unfairly treated demographic segments, and it inspects training data for imbalances, data drift, and label inconsistencies that may contribute to biased outputs.

Interactive visualizations let stakeholders explore how different variables affect model predictions. The tool can be integrated into MLOps pipelines, allowing real-time bias checks before production deployment. Its dashboard also suggests remediation techniques, such as re-weighting samples, fairness-aware retraining, or data augmentation strategies.

By supporting compliance with regulations like the EU AI Act, GDPR, and EEOC guidelines, the tool acts as a critical layer in responsible AI governance frameworks. By fostering transparency, accountability, and fairness, it helps build trust in AI systems and reduces the risk of reputational or regulatory backlash.
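For readers unfamiliar with the fairness metrics named in the description, the following is a minimal Python sketch of how they are commonly defined for a binary classifier. It is an illustration only, not the tool's own implementation or API: the function names (`group_rates`, `fairness_metrics`), the group labels, and the sample data are all hypothetical.

```python
import math
from collections import defaultdict


def group_rates(y_true, y_pred, groups):
    """Per-group selection rate and true positive rate (hypothetical helper)."""
    stats = defaultdict(lambda: {"n": 0, "pred_pos": 0, "actual_pos": 0, "true_pos": 0})
    for yt, yp, g in zip(y_true, y_pred, groups):
        s = stats[g]
        s["n"] += 1
        s["pred_pos"] += yp          # predicted positive
        s["actual_pos"] += yt        # actually positive
        s["true_pos"] += yt * yp     # positive and predicted positive
    rates = {}
    for g, s in stats.items():
        rates[g] = {
            "selection_rate": s["pred_pos"] / s["n"],
            # TPR is undefined when a group has no actual positives
            "tpr": s["true_pos"] / s["actual_pos"] if s["actual_pos"] else math.nan,
        }
    return rates


def fairness_metrics(y_true, y_pred, groups, privileged, unprivileged):
    """Compare an unprivileged group against a privileged reference group."""
    rates = group_rates(y_true, y_pred, groups)
    p, u = rates[privileged], rates[unprivileged]
    return {
        # Difference in positive-prediction rates; 0 means parity
        "statistical_parity_difference": u["selection_rate"] - p["selection_rate"],
        # Ratio of positive-prediction rates; 1 means parity
        "disparate_impact_ratio": (
            u["selection_rate"] / p["selection_rate"] if p["selection_rate"] else math.nan
        ),
        # Difference in true positive rates; 0 means parity
        "equal_opportunity_difference": u["tpr"] - p["tpr"],
    }


# Made-up labels and predictions for two hypothetical groups, "A" and "B"
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

metrics = fairness_metrics(y_true, y_pred, groups, privileged="A", unprivileged="B")
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```

In this made-up data, group B receives positive predictions at one third the rate of group A. A disparate impact ratio below 0.8 is often treated as a warning sign under the "four-fifths" rule of thumb associated with EEOC guidance, though the right threshold depends on context.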

Azunwena –

The bias detection tool has been instrumental in ensuring fairness in our AI models. Its comprehensive approach, covering various demographic factors and utilizing multiple fairness metrics, has allowed us to identify and mitigate potential biases we wouldn’t have otherwise detected. The insights gained through dataset inspection and performance parity checks have been invaluable in building more equitable and reliable AI solutions. This tool has significantly improved the integrity and trustworthiness of our models.

Kadijat –

This bias detection tool is incredibly valuable for ensuring fairness in our AI models. The comprehensive analysis across various demographic categories, coupled with its insightful fairness metrics and dataset inspection capabilities, has allowed us to proactively identify and mitigate potential biases. The performance parity checks provide an additional layer of confidence, ensuring that our models are equitable and responsible. It’s a crucial addition to our AI development pipeline.